Five architectural changes to be aware of in Tridion Sites 9.6

- Semantic AI

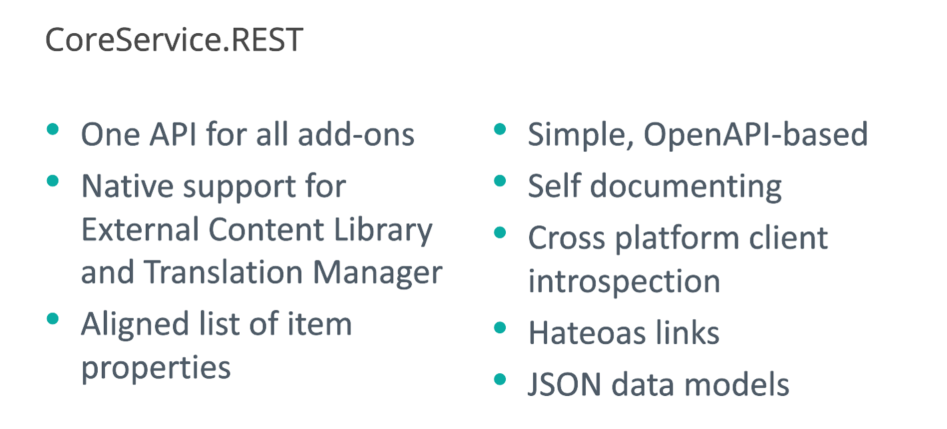

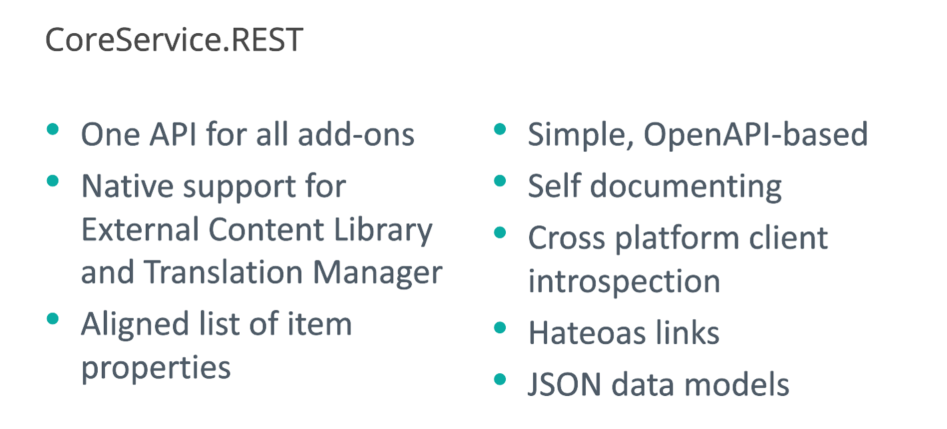

- Core Services .REST

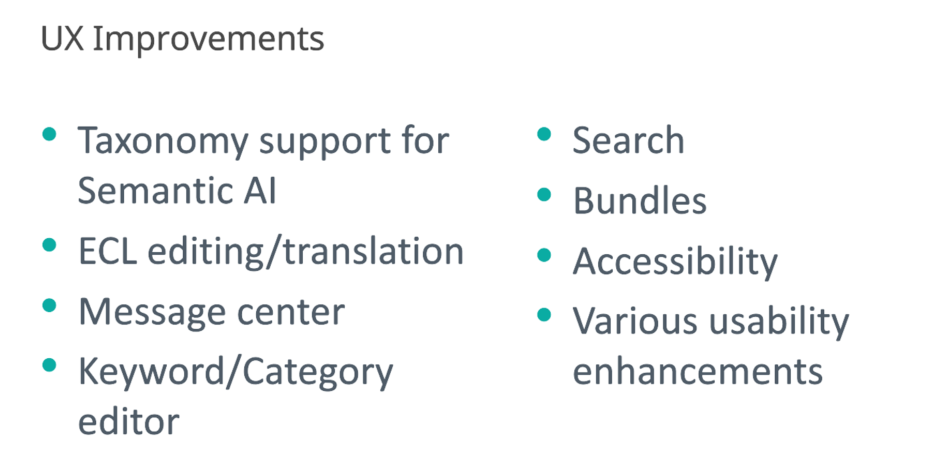

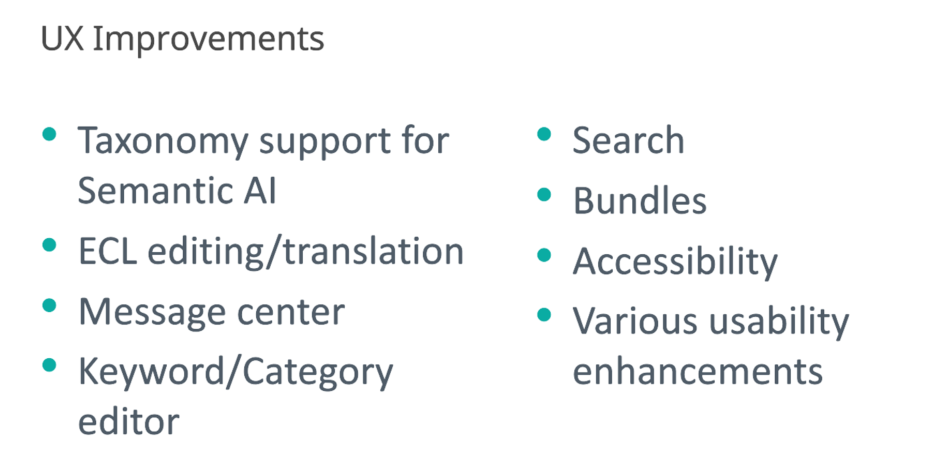

- UX Improvements

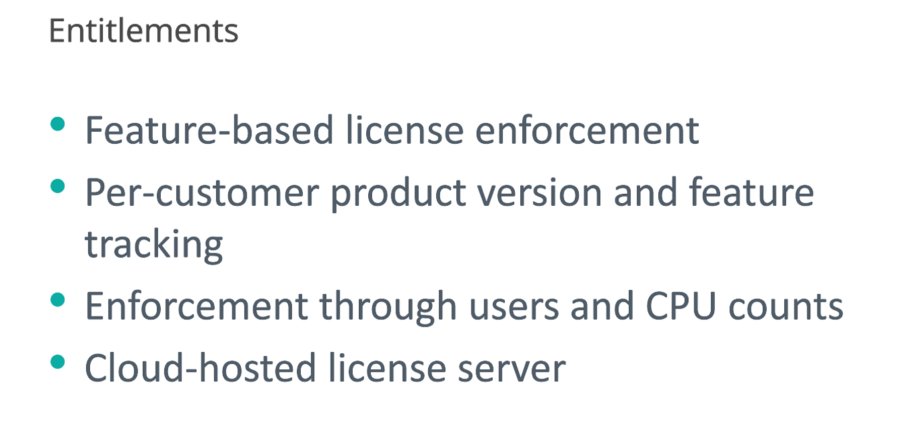

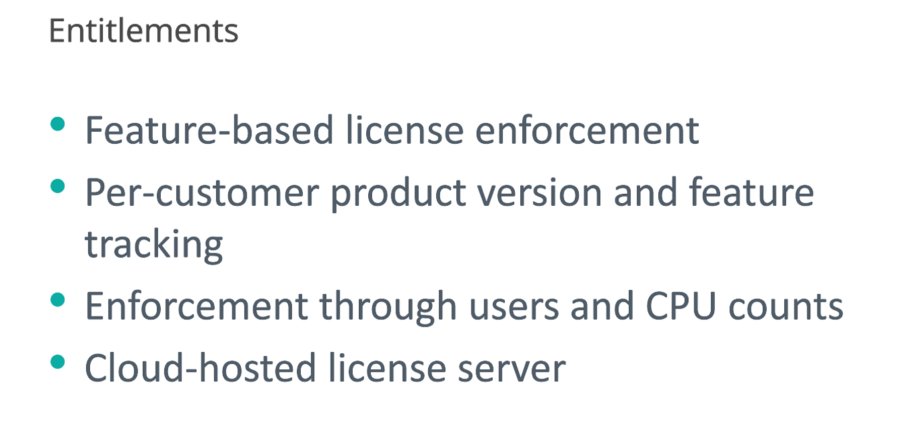

- Entitlements

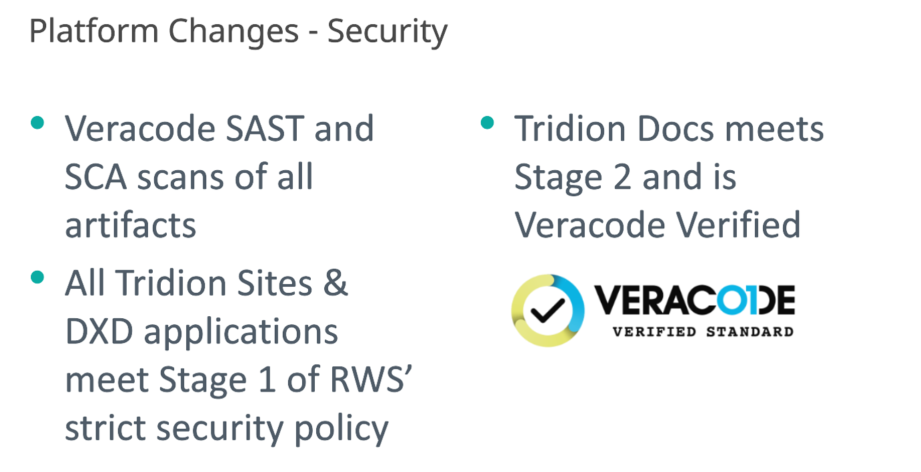

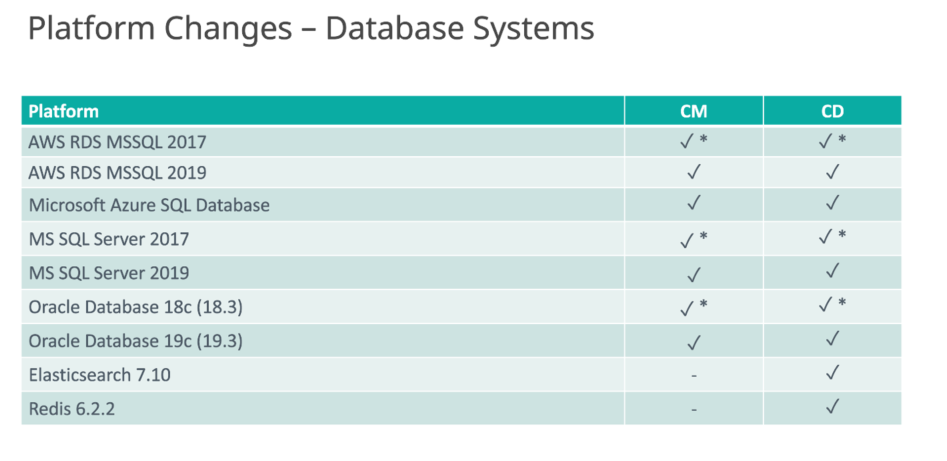

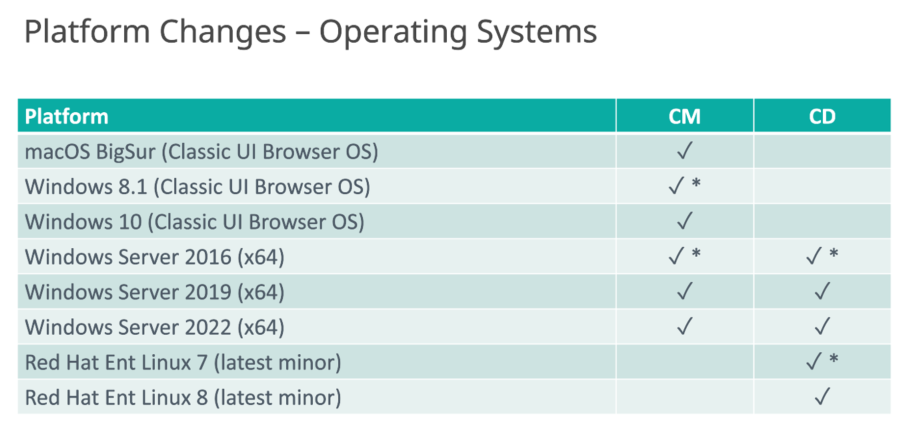

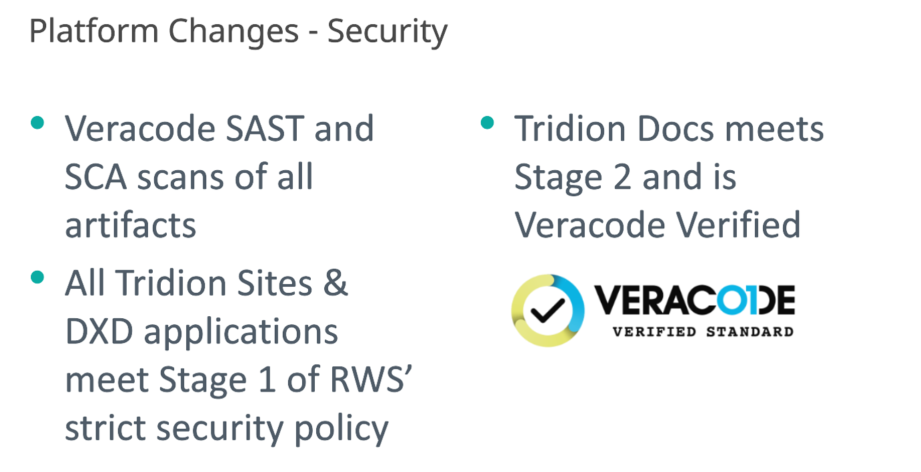

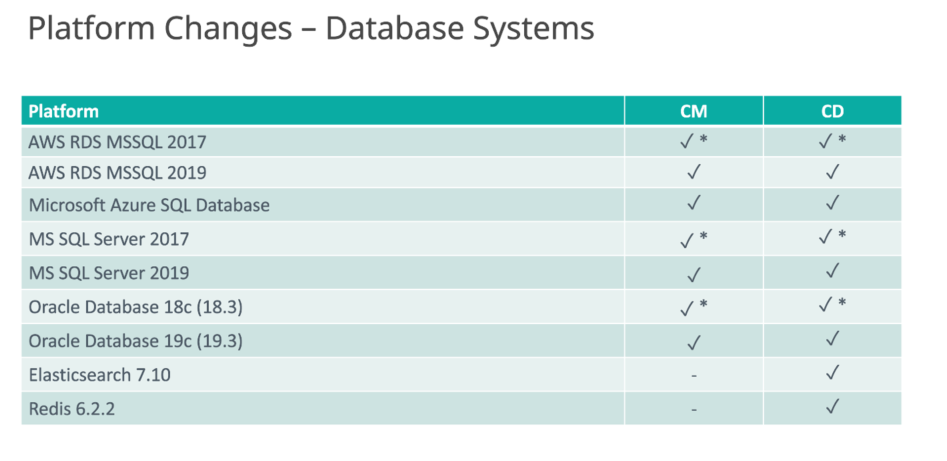

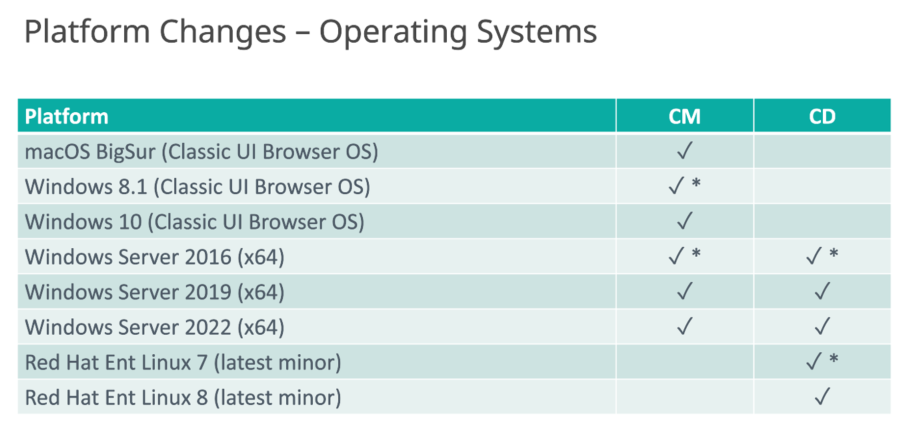

- Platform Changes

Content Manager


The steps below can be used to decrypt the Tridion.ContentManager.config file.

1. Back up the file C:\Program Files (x86)\SDL Web\config\Tridion.ContentManager.config

2. Rename the Tridion.ContentManager.config file to web.config

3. Open a command prompt as Administrator

4. Navigate to

%SYSTEMROOT%\Microsoft.NET\FRAMEWORKFOLDER\VERSIONFOLDER

for example C:\Windows\Microsoft.NET\Framework64\v4.0.30319

5. Run the command to decrypt a given section (e.g. database, tridion.security, search or searchIndexer)

aspnet_regiis.exe -pdf database "C:\Program Files (x86)\SDL Web\config"

6. Verify that the file is decrypted by opening web.config in a text editor and reviewing the decrypted section. The settings and values should be readable as plain text.

7. Rename the web.config file back to Tridion.ContentManager.config

8. To re-encrypt the file, follow the same steps but use the command

aspnet_regiis.exe -pef database "C:\Program Files (x86)\SDL Web\config" -prov TridionRsaProtectedConfigurationProvider

9. Restart IIS.

This file is typically encrypted. Specific sections can be encrypted and decrypted again.

These are:

– database

– tridion.security

– search

– searchIndexer
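As a rough illustration of the steps above, the following Python sketch only builds the `aspnet_regiis.exe` command lines for each of the four sections; it does not run anything. The helper names `regiis_command` and `all_commands` are hypothetical, and the path and provider name are simply the ones used in this post, so adjust them for your installation.

```python
from typing import List

# The four sections named above that can be encrypted or decrypted.
SECTIONS = ["database", "tridion.security", "search", "searchIndexer"]

# Values taken from the steps above; adjust for your installation.
CONFIG_DIR = r"C:\Program Files (x86)\SDL Web\config"
PROVIDER = "TridionRsaProtectedConfigurationProvider"


def regiis_command(section: str, encrypt: bool, config_dir: str = CONFIG_DIR) -> str:
    """Return the aspnet_regiis.exe command line for one section."""
    if section not in SECTIONS:
        raise ValueError(f"unknown section: {section}")
    if encrypt:
        # -pef encrypts the section using the named provider.
        return f'aspnet_regiis.exe -pef {section} "{config_dir}" -prov {PROVIDER}'
    # -pdf decrypts the section.
    return f'aspnet_regiis.exe -pdf {section} "{config_dir}"'


def all_commands(encrypt: bool) -> List[str]:
    """Return one command line per section, in the order listed above."""
    return [regiis_command(section, encrypt) for section in SECTIONS]


if __name__ == "__main__":
    for line in all_commands(encrypt=False):
        print(line)
```

Remember that the file still has to be renamed to web.config before running the commands and renamed back afterwards.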

You may wish to secure your microservices using Secure Sockets Layer (SSL), so that an HTTPS connection is required to interact with the service. Refer to the SDL documentation for more details.

You need OpenSSL to generate the keystore. You can use the following tools:

For more information about OpenSSL and the openssl command line tool, refer to https://www.openssl.org/docs/man1.0.2/apps/openssl.html.

You can use CYGWIN – https://www.cygwin.com/

Step 1) Generate the PFX file using IIS, or from the Windows Start menu: type mmc, open it, and in the Console window's top menu click File > Add/Remove Snap-in. Keep a note of the password.

Step 2) Generate a Base-64 encoded X.509 (.CER) file using IIS

Step 3) Use one of the OpenSSL tools to execute the following commands

Step 4) Generate the key file (microservices_o.key) from the PFX file

openssl pkcs12 -in /home/user/tridion_services-o.pfx -nocerts -out microservices_o.key

Enter the same password that was created during the PFX export

Enter Import Password:

Enter PEM pass phrase:

Verifying - Enter PEM pass phrase:

Step 5) Run the following OpenSSL command to turn your private key and certificate into an export file, that is, generate the P12 file from the CER file and the key file:

$ openssl pkcs12 -export -in /home/user/tridion_services-o.cer -inkey /home/user/microservices_o.key -name "cds-microservices_o" -out /home/user/microservices_o.P12

Enter pass phrase for /home/user/microservices_o.key:

Enter Export Password:

Verifying - Enter Export Password:

Step 6) Copy the files generated under /home/user to a different directory, for example D:\Cert

Step 7) The keytool command line tool is included with Java. For more information about this tool, refer to http://docs.oracle.com/javase/7/docs/technotes/tools/windows/keytool.html.

Step 8) Add C:\Program Files\Java\jdk1.8.0_271\bin (example) to the PATH. This allows you to run the keytool command from any path.

Step 9) Run the following keytool command (on one line) to import your file into a keystore file:

D:\Cert>keytool -importkeystore -deststorepass ***** -destkeypass Welkom01 -destkeystore cds-microservices_o.JKS -srckeystore microservices_o.P12 -srcstoretype PKCS12 -srcalias cds-microservices_o

Importing keystore microservices_o.P12 to cds-microservices_o.JKS...

Enter source keystore password:
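The three conversions in steps 4, 5 and 9 (PFX to key file, CER plus key to P12, P12 to JKS) can be summarized in a small Python sketch that only assembles the argument lists. The function name `conversion_commands` is hypothetical, passwords are deliberately left out so that openssl and keytool prompt for them, and the file names are the ones used in this post.

```python
import shlex
from typing import List


def conversion_commands(pfx: str, cer: str, key: str, p12: str,
                        jks: str, alias: str) -> List[List[str]]:
    """Build the three conversion commands as argument lists (nothing is executed)."""
    # Step 4: extract the private key from the PFX file.
    extract_key = ["openssl", "pkcs12", "-in", pfx, "-nocerts", "-out", key]
    # Step 5: combine the certificate and the key into a P12 file under the alias.
    make_p12 = ["openssl", "pkcs12", "-export", "-in", cer, "-inkey", key,
                "-name", alias, "-out", p12]
    # Step 9: import the P12 file into a JKS keystore.
    make_jks = ["keytool", "-importkeystore", "-destkeystore", jks,
                "-srckeystore", p12, "-srcstoretype", "PKCS12",
                "-srcalias", alias]
    return [extract_key, make_p12, make_jks]


if __name__ == "__main__":
    commands = conversion_commands(
        pfx="tridion_services-o.pfx",
        cer="tridion_services-o.cer",
        key="microservices_o.key",
        p12="microservices_o.P12",
        jks="cds-microservices_o.JKS",
        alias="cds-microservices_o",
    )
    for args in commands:
        print(shlex.join(args))
```

Printing the commands first makes it easier to check that the same alias and file names are used consistently across all three steps.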

Step 11) Add the keystore to the Java runtime security cacerts

Step 12) Before adding the keystore to the Java runtime security cacerts, you can list all the certificates already added to the Java runtime using the following command

keytool -keystore "C:\Program Files\Java\jre1.8.0_271\lib\security\cacerts" -storepass changeit -list

Step 13) Import the certificate into the Java runtime security cacerts. Note that changeit is the default password

keytool -importcert -file tridion_services-o.cer -alias tridion_services-o -keystore "C:\Program Files\Java\jre1.8.0_271\lib\security\cacerts" -storepass changeit

Step 14) Copy the JKS file to the folder where the Tridion microservices are installed, for example D:\SDL_TridionServices\Cert

Step 15) If you have installed the microservices with HTTP, you can uninstall the service

Step 16) Go to D:\SDL_TridionServices\Discovery\config and edit application.properties

Add the following entries

server.ssl.enabled=true

server.ssl.protocol=TLS

server.ssl.key-alias=cds-microservices_o

server.ssl.key-store=D:/SDL_TridionServices/Cert/cds-microservices_o.JKS

server.ssl.key-password=****
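Before reinstalling the service, it can be useful to check that all five `server.ssl.*` entries are actually present in application.properties. The following Python sketch does that with a deliberately simplified parser (it handles only `key=value` lines and `#` or `!` comments, not line continuations or `:` separators); the function names are hypothetical.

```python
from typing import Dict, List

# The entries added in step 16.
REQUIRED_KEYS = [
    "server.ssl.enabled",
    "server.ssl.protocol",
    "server.ssl.key-alias",
    "server.ssl.key-store",
    "server.ssl.key-password",
]


def parse_properties(text: str) -> Dict[str, str]:
    """Parse simple key=value lines, skipping blank lines and comments."""
    props: Dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith(("#", "!")) or "=" not in line:
            continue
        key, value = line.split("=", 1)
        props[key.strip()] = value.strip()
    return props


def missing_ssl_keys(text: str) -> List[str]:
    """Return the required SSL keys that are absent or have an empty value."""
    props = parse_properties(text)
    return [key for key in REQUIRED_KEYS if not props.get(key)]


if __name__ == "__main__":
    sample = "server.ssl.enabled=true\nserver.ssl.protocol=TLS\n"
    print(missing_ssl_keys(sample))
```

An empty list means all five entries were found; anything else names the entries that still need to be added.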

Step 17) Go to D:\SDL_TridionServices\Discovery\config, edit cd_storage_conf.xml, and change the discovery URL to the new domain with https for both the discovery and token service URLs

Step 18) Install the Discovery Service again if you had installed it with HTTP, or do a fresh install of the Discovery Service

Step 19) Open a Windows command prompt, go to D:\SDL_TridionServices\Discovery\config, and run the discovery registration commands

java -jar discovery-registration.jar read

java -jar discovery-registration.jar update

Step 20) If you have a multi-level certificate, generate a CER file from the root certificate: access the HTTPS discovery URL using the browser on the Content Delivery server, open the certificate, go to the Details tab, choose Copy to File, select Base-64 encoded X.509 (.CER), and export the CER file

Step 21) Convert the CER files to CRT using OpenSSL commands (for a multi-level root certificate)

$ openssl x509 -inform PEM -in "/home/user/Root_CA.cer" -out /home/user/Root_CA.crt

$ openssl x509 -inform PEM -in "/home/user/Sub_Root_CA.cer" -out /home/user/Sub_Root_CA.crt

Step 22) Add the CRT files to the Java runtime security cacerts on the Content Manager server

Step 23) Log on to the Content Manager server as the TridionAdmin user and add the JRE to the PATH environment variable: C:\Program Files\Java\jre1.8.0_271\bin

Step 24) Copy the CRT and CER files from C:\cygwin64\user\ to D:\Cert

Step 25) Go to D:\Cert and run the following commands

D:\Cert>keytool -importcert -file Root_CA.crt -alias Root_CA -keystore "C:\Program Files\Java\jre1.8.0_271\lib\security\cacerts"

D:\Cert>keytool -importcert -file Sub_Root_CA.crt -alias Sub_Root_CA -keystore "C:\Program Files\Java\jre1.8.0_271\lib\security\cacerts"

Enter keystore password: changeit

Enter "yes" to trust the certificate.

Step 26) Verify that the certificate has been added

keytool -keystore "C:\Program Files\Java\jre1.8.0_271\lib\security\cacerts" -storepass changeit -list

Step 27) Access the Discovery and Deployer HTTPS URLs from the Content Manager server

Step 28) Transport Service changes: on the Content Manager server, go to D:\SDL Web\config (or the path where Tridion is installed), back up cd_transport_conf.xml, and add the following

<Sender Type="DiscoveryService" Class="com.tridion.transport.connection.connectors.DiscoveryServiceTransportConnector">

<KeyStore Path="C:\Program Files\Java\jre1.8.0_271\lib\security\cacerts" Secret="changeit" />

</Sender>
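A quick way to confirm that the Sender element was added correctly is to parse the fragment and read back the keystore settings. The Python sketch below uses the standard library XML parser; the function name `discovery_keystore` is hypothetical, and it assumes the fragment (or the document it is embedded in) is well-formed XML.

```python
import xml.etree.ElementTree as ET
from typing import Optional, Tuple


def discovery_keystore(xml_text: str) -> Optional[Tuple[Optional[str], Optional[str]]]:
    """Return (Path, Secret) of the KeyStore in the DiscoveryService Sender, or None."""
    root = ET.fromstring(xml_text)
    # iter() also yields the root itself, so a bare <Sender> fragment works too.
    for sender in root.iter("Sender"):
        if sender.get("Type") != "DiscoveryService":
            continue
        keystore = sender.find("KeyStore")
        if keystore is not None:
            return keystore.get("Path"), keystore.get("Secret")
    return None


if __name__ == "__main__":
    fragment = (
        '<Sender Type="DiscoveryService" '
        'Class="com.tridion.transport.connection.connectors.DiscoveryServiceTransportConnector">'
        r'<KeyStore Path="C:\Program Files\Java\jre1.8.0_271\lib\security\cacerts" Secret="changeit" />'
        "</Sender>"
    )
    print(discovery_keystore(fragment))
```

If the function returns None, the Sender of type DiscoveryService or its KeyStore child is missing from the text that was checked.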

Step 29) Restart the Transport and Publisher services

Step 30) Update the Topology Manager with the HTTPS discovery URL

Get-TtmCdEnvironment (lists all Content Delivery environments in the topology)

Set-TtmCdEnvironment -ID StagingCdEnvironment -DiscoveryEndpointUrl "https://localhost:8082/discovery.svc" -EnvironmentPurpose "Staging" -AuthenticationType OAuth -ClientId cmuser -ClientSecret '*****'

If you get the following error:

The underlying connection was closed: An unexpected error occurred on a receive.

The client and server cannot communicate, because they do not possess a common algorithm

Solution: refer to http://hem-kant.blogspot.com/2018/07/sdl-web-and-tls-12-or-higher.html and set the following registry values

[HKEY_LOCAL_MACHINE\SOFTWARE\Microsoft\.NETFramework\v4.0.30319]

"SchUseStrongCrypto"=dword:00000001

[HKEY_LOCAL_MACHINE\SOFTWARE\Wow6432Node\Microsoft\.NETFramework\v4.0.30319]

"SchUseStrongCrypto"=dword:00000001
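If you prefer to apply both registry values in one go, they can be written to a .reg file and imported. The Python sketch below only generates the file content; the function name `strong_crypto_reg` is hypothetical, and the two keys and the value are exactly the ones listed above.

```python
# The two .NET Framework keys listed above (64-bit and 32-bit views).
REG_KEYS = [
    r"HKEY_LOCAL_MACHINE\SOFTWARE\Microsoft\.NETFramework\v4.0.30319",
    r"HKEY_LOCAL_MACHINE\SOFTWARE\Wow6432Node\Microsoft\.NETFramework\v4.0.30319",
]


def strong_crypto_reg() -> str:
    """Return .reg file content that sets SchUseStrongCrypto=1 under both keys."""
    lines = ["Windows Registry Editor Version 5.00", ""]
    for key in REG_KEYS:
        lines.append(f"[{key}]")
        lines.append('"SchUseStrongCrypto"=dword:00000001')
        lines.append("")
    # .reg files conventionally use Windows line endings.
    return "\r\n".join(lines)


if __name__ == "__main__":
    print(strong_crypto_reg())
```

Review the generated text before importing it, and back up the registry first, as with any registry change.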

Below are some suggestions for setting up SDL Tridion Sites.

Please note that these suggestions will vary between customers and depend on the implementation. Please contact SDL with specific questions.

| Component | Suggestions |
| --- | --- |
| Content Manager | |
| | Set up a master/slave configuration. Refer to the search system diagram (outscale). |
| Uploads to the Core Service for scaled out setup | Store the images in either a shared folder or an S3 bucket. See "Configuring a shared network temporary location for uploads to the Core Service". |
| Content Porter – Import / Export service for scaled out setup | Configure this setup. See "Configuring high-availability storage for Import Export service items". |
| Content Delivery | |
| Content Deployer Service | Favor more memory over more CPU to meet mass publishing requirements. |
| | Exclude binaries from the cache, because they consume memory quickly. |
| | Keep the state store database separate. This also helps to improve the performance of the broker database and the syncing of databases between environments. |
| | Set up a load balancer for the Deployer, configure both servers identically, and store the packages in a common shared folder (e.g. EFS). Use the load balancer endpoint for discovery registration. Keep the second Deployer service turned off. If the first Deployer service goes down or crashes, switch on the second Deployer server; it will take over without any manual configuration changes. |
| Content Service | Exclude binaries from the cache, because they consume memory quickly. |
| CMS Load Balancer | Keep a minimum of 60 minutes or higher. |
| | The CMS load balancer endpoint must set a sticky cookie and use a health check over HTTPS on port 443 that expects status code 200. |
| Database | Set up two scheduled jobs. Job 1: runs every 4 hours with update statistics only (the interval can be decided based on how often content updates and publishing happen). Job 2: runs every day at midnight with both reindex and update statistics. Make sure there is not much traffic at that time; run it at idle time, not peak time. |
| ActiveMQ | Set up high availability on premises, or use AWS ActiveMQ. |
| Instance storage, e.g. HDD/shared EFS | Use high I/O performance storage for better performance. |
| Session content service | Enable object caching, but disable web application caching and CIL caching in the model service. |
| Credentials | Encrypt all the credentials in the configuration files. |

SDL Tridion Sites 9.5 marks a major step towards a fully rejuvenated user experience for SDL Tridion practitioners, while also including improvements in Search and Content Delivery.

Experience Space – our new and improved user interface

How the Dynamic Experience Delivery component has been improved with better search and easier headless publishing

How the functional and technical features of each release compare in simple summary tables

Please refer to the community announcement:

What’s new in SDL Tridion Sites 9.5

Refer to my blog for more details. You can also download the datasheet from our website.

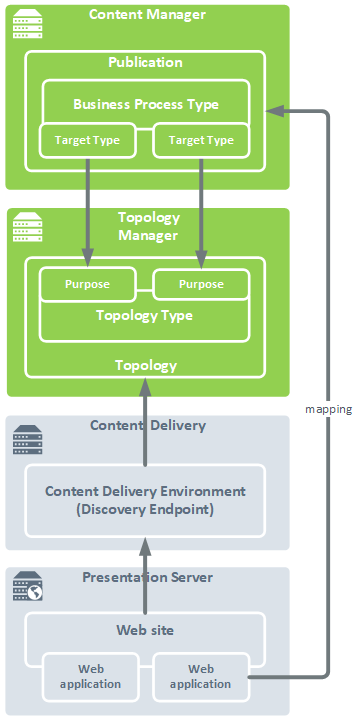

Topology Manager forms the bridge between the Content Manager and Content Delivery environments. By decoupling the two environments, it becomes easy to swap out environments on either side, and to separate concerns by specific roles in your organization.

Topology Manager maps logical publishing targets selected by the end user to physical publishing destinations on the presentation environments. Because Topology Manager is a separate software component from Content Manager, it makes it easy for you to extend the delivery environment to include more endpoints, or to move your delivery environment into the Cloud.

Topology Manager has no clients or APIs, only a database. The user interface consists of a number of PowerShell cmdlets.

Topology Manager concepts

Use Topology Manager to communicate information between a Content Manager environment on the one hand, and one or more Content Delivery environments on the other hand.

Web application

At the most basic level, each publishable Publication in Content Manager maps to a Web application. Multiple Publications can map to the same Web application. Each Web application is part of a Web site, and is represented by an ID and a context URL (relative to the Web site’s base URL).

Web site

A Web site has an ID and a base URL. One Web site contains one or more Web applications.

Content Delivery environment

A Content Delivery environment, identified by an ID, and accessible through a Discovery Endpoint URL and a set of credentials, represents a number of Content Delivery Capabilities and a collection of one or more Web sites, hosted on one or more Presentation Servers.

Purpose

Every Content Delivery environment has a Purpose. A Content Delivery environment’s Purpose makes clear to what end the environment is being used.

For example, an environment with a Purpose called “Staging” is intended to be used as a staging environment; an environment with a Purpose called “Live” is intended to be used as a live environment.

Topology

A Topology defines a complete publishing environment: it consists of a set of Content Delivery environments, each with a distinct Purpose. A Topology is a concrete instance of a Topology Type. For example, given a Topology Type that defines two Purposes “Live” and “Staging”, a Topology defines what the words “Live” and “Staging” actually stand for. The Topology must specify one Content Delivery environment for each of the Purposes defined in its Topology Type.

If your organization has multiple publishing environments, it will also have multiple Topologies. For example:

Your implementation supports different definitions for “Live” and “Staging” that depend on what is being published. For example, in some situations you may want to define “Staging” and “Live” as Content Delivery environments in the cloud, while in other situations “Staging” and “Live” might refer to on-premises Content Delivery environments. In this scenario, you would define one Topology Type that defines the Purposes “Staging” and “Live”, and two Topologies, one used for on-premises publishing and another for cloud publishing.

Another example might be that, depending on regulatory concerns or to optimize performance, you want the servers associated with “Staging” and “Live” environments to reside in a specific geographic location. In this case, you would again have one Topology Type defining “Staging” and “Live”, and any number of Topologies, one for each geographic location.

If you have multiple Topologies defined for a Topology Type, the mapping from the Publication to the Web application determines which of the Topologies content gets published to.

Topology Type

A Topology Type is a list of Purposes, that is, a list of the types of environment you can publish to. Content may pass through a number of environments before going live, depending on the publishing workflows your organization supports.

For example, for legally sensitive content, your organization may require one staging environment for editorial review, and another for legal review. This would result in a Topology Type with three Purposes: “Staging Editorial”, “Staging Legal” and “Live”.

At the same time, content that requires no legal review may require only one staging environment, which would entail another Topology Type, with only two Purposes: “Staging” and “Live”.

Business Process Type

In Content Manager, a Business Process Type represents a number of settings that together define a certain type of business process in your organization. Put simply, it defines how content is allowed to flow through your organization, both while it is being managed and when it gets published. In the context of publishing, a Business Process Type refers to a Topology Type and contains a number of Target Types (as many as the Topology Type has Purposes). Each publishable Publication must have one and exactly one Business Process Type associated with it.

Target Type

Target Types are the items that the end user selects when publishing (or unpublishing) content. Each Target Type has a Purpose. Target Types would typically be named after their Purpose, so they would be “Live” and “Staging”. Note that the physical location where the content will end up depends not only on the Target Type(s) selected, but also on the Publication from which the publish action takes place.

In the Business Process Type, Target Types can be given a Minimal Approval Status, meaning that publishing to that Target Type is impossible until it has reached at least the Approval Status specified; and they can be given higher or lower priority than the default, to accelerate publishing to a live environment when possible, or delay publishing to a staging environment when necessary. You can also restrict the ability to publish to a Target Type to a certain subset of your users.
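To make the relationship between Topology Types, Topologies and Content Delivery environments more concrete, here is a minimal Python sketch of the rule that a Topology must specify one environment for each Purpose in its Topology Type. The class and method names are hypothetical and are not part of any Tridion API; they only model the concepts described above.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class TopologyType:
    id: str
    purposes: List[str]  # e.g. ["Staging", "Live"]


@dataclass
class CdEnvironment:
    id: str
    purpose: str


@dataclass
class Topology:
    id: str
    topology_type: TopologyType
    environments: List[CdEnvironment]

    def validate(self) -> Dict[str, str]:
        """Map each Purpose to its environment ID, or raise if the rule is broken."""
        by_purpose: Dict[str, str] = {}
        for env in self.environments:
            if env.purpose not in self.topology_type.purposes:
                raise ValueError(f"{env.id}: purpose {env.purpose!r} is not in the Topology Type")
            if env.purpose in by_purpose:
                raise ValueError(f"more than one environment for purpose {env.purpose!r}")
            by_purpose[env.purpose] = env.id
        missing = [p for p in self.topology_type.purposes if p not in by_purpose]
        if missing:
            raise ValueError(f"no environment for purposes: {missing}")
        return by_purpose


if __name__ == "__main__":
    demo_type = TopologyType("DemoTopologyType", ["Staging", "Live"])
    demo = Topology("DemoTopology", demo_type, [
        CdEnvironment("DemoStagingCdEnvironment", "Staging"),
        CdEnvironment("DemoLiveCdEnvironment", "Live"),
    ])
    print(demo.validate())
```

The same Topology Type can be reused by several Topologies, for example one for on-premises publishing and one for cloud publishing, each with its own pair of environments.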

# Create Topology Type

# EnvironmentPurposes defines the Staging and Live targets

Add-TtmCdTopologyType -ID DemoTopologyType -Name "Demo Topology Type" -EnvironmentPurposes @("Staging", "Live")

# Create Content Delivery Environment ( Staging )

Add-TtmCdEnvironment -ID DemoStagingCdEnvironment -DiscoveryEndpointUrl https://localhost:8082/discovery.svc -EnvironmentPurpose Staging -AuthenticationType OAuth -ClientId cmuser -ClientSecret '******'

# Create Content Delivery Environment ( Live )

# Make sure the discovery port is different if both environments run on the same machine

Add-TtmCdEnvironment -ID DemoLiveCdEnvironment -DiscoveryEndpointUrl https://localhost:8082/discovery.svc -EnvironmentPurpose Live -AuthenticationType OAuth -ClientId cmuser -ClientSecret '*****'

# Create Topology

Add-TtmCdTopology -Id DemoTopologyType -Name "Demo Topology" -CdTopologyTypeId DemoTopologyType -CdEnvironmentIds @("DemoStagingCdEnvironment", "DemoLiveCdEnvironment")

# Create Website

Add-TtmWebsite -Id Staging_Website -CdEnvironmentId DemoStagingCdEnvironment -BaseUrls @("https://demo.staging.com")

Add-TtmWebsite -Id Live_Website -CdEnvironmentId DemoLiveCdEnvironment -BaseUrls @("https://demo.live.com")

# Create Mapping

Add-TtmMapping -Id EN_STAGING_Mapping -PublicationID "tcm:0-40-1" -WebApplicationId Staging_Website_RootWebApp -RelativeUrl /en;

Add-TtmMapping -Id ES_STAGING_Mapping -PublicationID "tcm:0-41-1" -WebApplicationId Staging_Website_RootWebApp -RelativeUrl /es;
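The effect of the website and mapping commands can be pictured as a lookup from a Publication to a public URL: the website's base URL plus the mapping's relative URL. The Python sketch below is a simplified model of that idea, not of Topology Manager itself: it maps a Publication straight to a website and skips the web application layer, and all class and function names are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Website:
    id: str
    base_url: str


@dataclass
class Mapping:
    id: str
    publication_id: str
    website_id: str
    relative_url: str


def publication_url(publication_id: str, mappings: List[Mapping],
                    websites: Dict[str, Website]) -> str:
    """Resolve a Publication to base URL + relative URL using the first matching mapping."""
    for mapping in mappings:
        if mapping.publication_id == publication_id:
            site = websites[mapping.website_id]
            return site.base_url.rstrip("/") + "/" + mapping.relative_url.strip("/")
    raise KeyError(f"no mapping for {publication_id}")


if __name__ == "__main__":
    websites = {"Staging_Website": Website("Staging_Website", "https://demo.staging.com")}
    mappings = [
        Mapping("EN_STAGING_Mapping", "tcm:0-40-1", "Staging_Website", "/en"),
        Mapping("ES_STAGING_Mapping", "tcm:0-41-1", "Staging_Website", "/es"),
    ]
    print(publication_url("tcm:0-41-1", mappings, websites))
```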

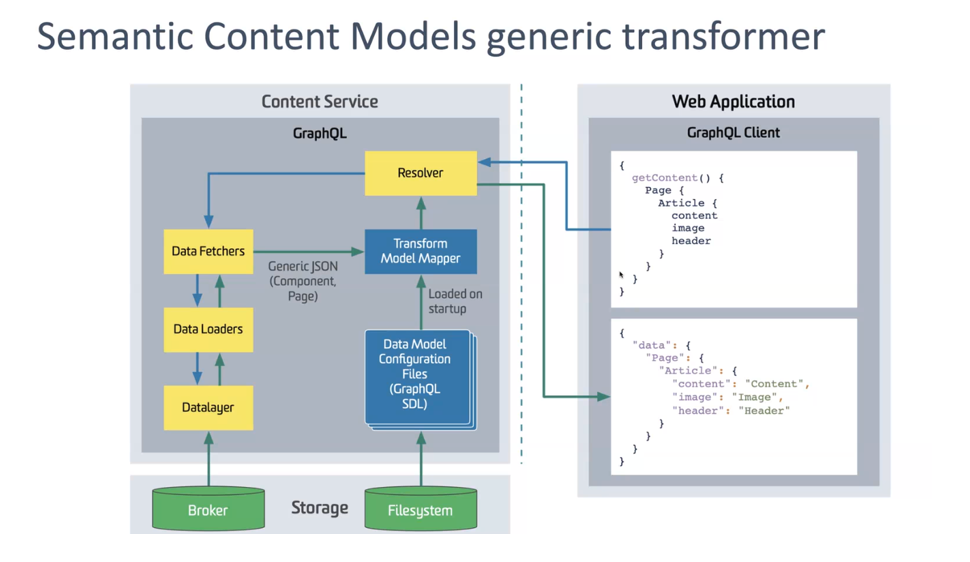

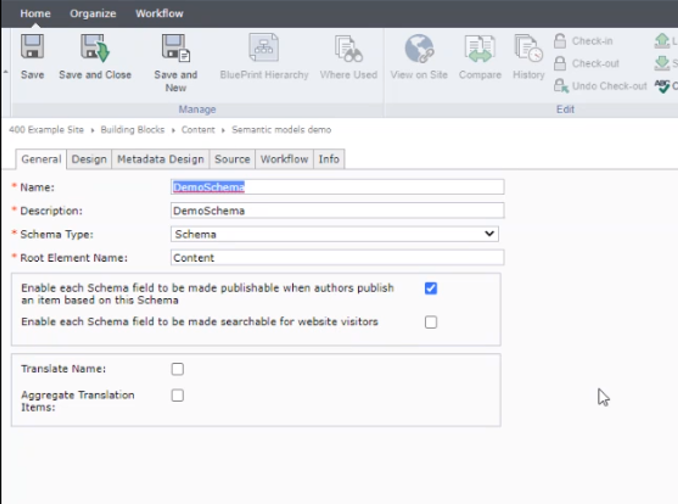

Semantic Content Models


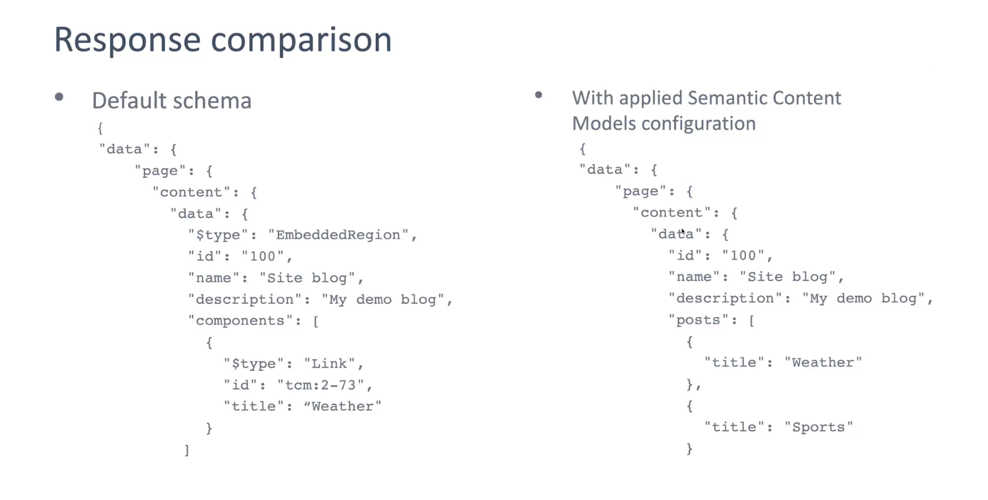

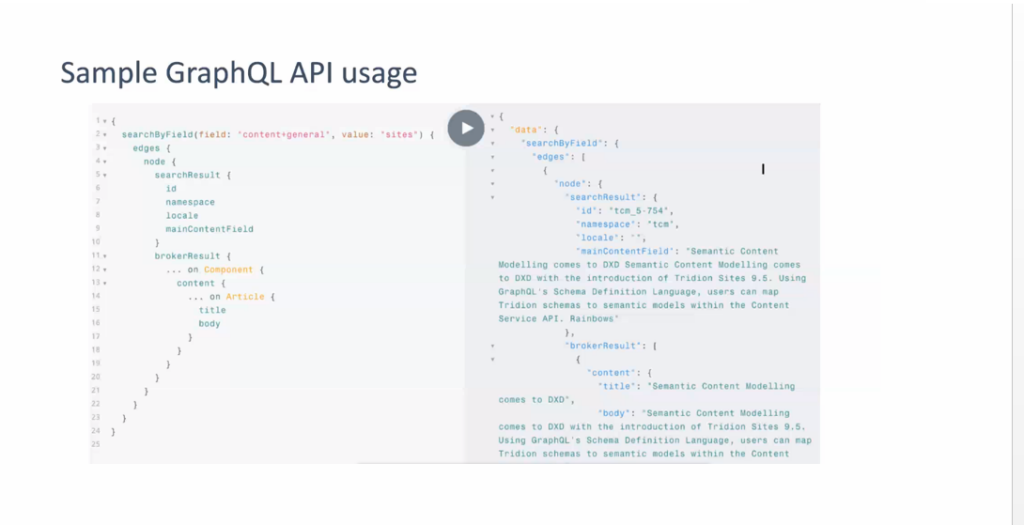

Semantic Content Models allow you to define a mapping between the Tridion content fields and how they are queried through the GraphQL API.

This abstraction mechanism removes dependencies between the two, so that future changes to your content model don’t directly impact your front-end applications. As a result, your front-end developers can build engaging digital experiences more easily, without needing to know the inner workings of SDL Tridion.
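The decoupling described above can be sketched in a few lines of Python: front ends ask for semantic names, and a mapping translates those to whatever the underlying content fields are currently called. The field names `headline_text` and `pub_date` and the function `to_semantic` are made up for illustration and do not come from Tridion.

```python
from typing import Any, Dict

# Hypothetical mapping: semantic name exposed to front ends -> content field name.
POST_MAPPING: Dict[str, str] = {
    "title": "headline_text",
    "publishedAt": "pub_date",
}


def to_semantic(raw_fields: Dict[str, Any], mapping: Dict[str, str]) -> Dict[str, Any]:
    """Expose content fields under their semantic names, skipping absent fields."""
    return {
        semantic: raw_fields[source]
        for semantic, source in mapping.items()
        if source in raw_fields
    }


if __name__ == "__main__":
    raw = {"headline_text": "Hello", "pub_date": "2021-01-01", "internal_note": "x"}
    print(to_semantic(raw, POST_MAPPING))
```

If a content field is later renamed, only the mapping entry changes; code that reads `title` and `publishedAt` stays the same.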

type Post {

id: String!

title: String!

publishedAt: DateTime!

likes: Int! @default(value: 0)

blog: Blog @relation(name: "Posts")

}

Allow customers to query and filter using familiar concepts (for example: Article, News, Post, Blog, etc.)

type Blog {

id: String!

name: String!

description: String

posts: [Post!]! @relation(name: "Posts")

}

Semantic Content Models in DXD

Semantic content models exposed natively in the content API

page(namespaceId: 1, publicationId: 2, pageId: 76) {

content {

... on UntypedContent {

data {

}

}

}

}

Refined GraphQL API to support custom schema types within existing root queries (items, component, page)

page(namespaceId: 1, publicationId: 2, pageId: 76) {

content {

... on Post {

id

title

}

}

}
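For readers who want to send such a query from code, the Python sketch below builds the query text for a page with an inline fragment on a semantic type, and wraps it in the `{"query": ...}` JSON body commonly used for GraphQL over HTTP. The function names are hypothetical, no endpoint is called, and the argument and field names are simply those shown in the query above.

```python
import json
from typing import List


def page_query(namespace_id: int, publication_id: int, page_id: int,
               type_name: str, fields: List[str]) -> str:
    """Build the page query text with an inline fragment on one semantic type."""
    selection = " ".join(fields)
    return (
        "{ page(namespaceId: %d, publicationId: %d, pageId: %d) "
        "{ content { ... on %s { %s } } } }"
        % (namespace_id, publication_id, page_id, type_name, selection)
    )


def request_body(query: str) -> str:
    """Wrap the query in a JSON body for a GraphQL POST request."""
    return json.dumps({"query": query})


if __name__ == "__main__":
    query = page_query(1, 2, 76, "Post", ["id", "title"])
    print(request_body(query))
```

The resulting string can be posted to your content service's GraphQL endpoint with any HTTP client, together with whatever authentication your environment requires.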

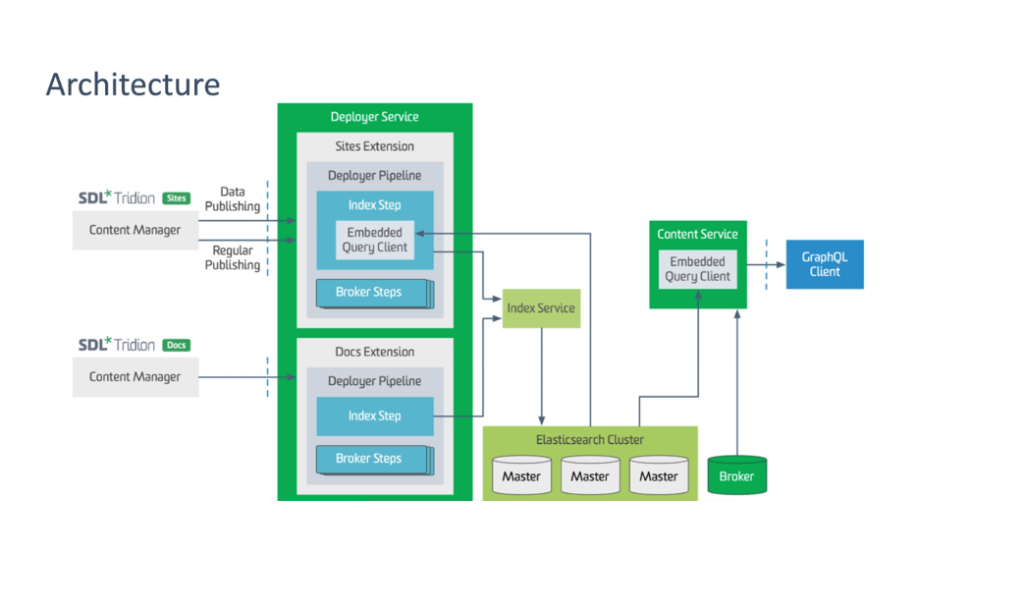

Why SDL Tridion Sites Search


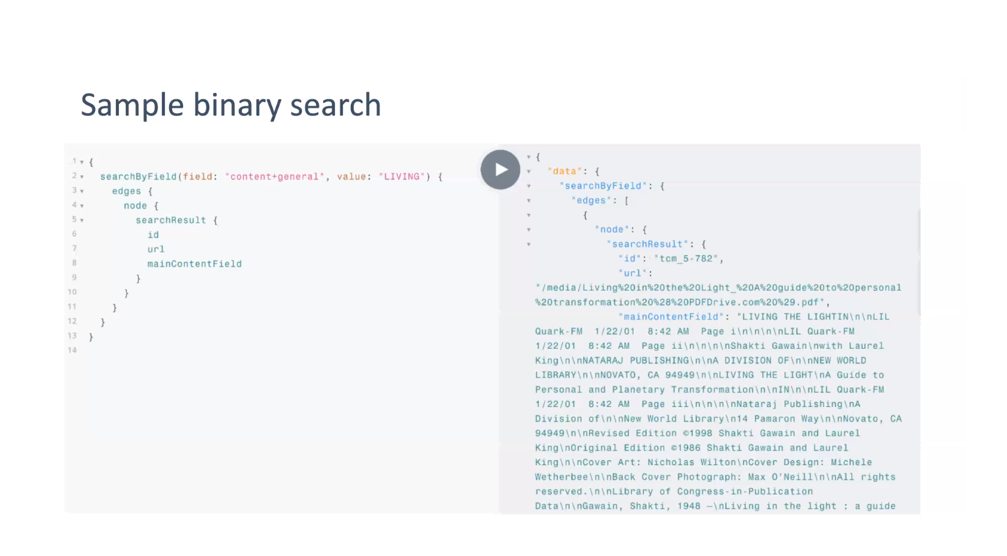

SDL Tridion Sites now ships with out-of-the-box support for Elasticsearch-based search for published content, helping you find published content within pages, components and binaries. You can also search for content from SDL Tridion Docs, providing federated search capabilities across both unstructured and structured content. People searching on your website or other digital channels can now find all the relevant information quickly and easily, without the need to perform multiple searches across your marketing content or in-depth product and service information.

# Content Service

application.properties

# broker: default profile to enable broker results

# search: enables search

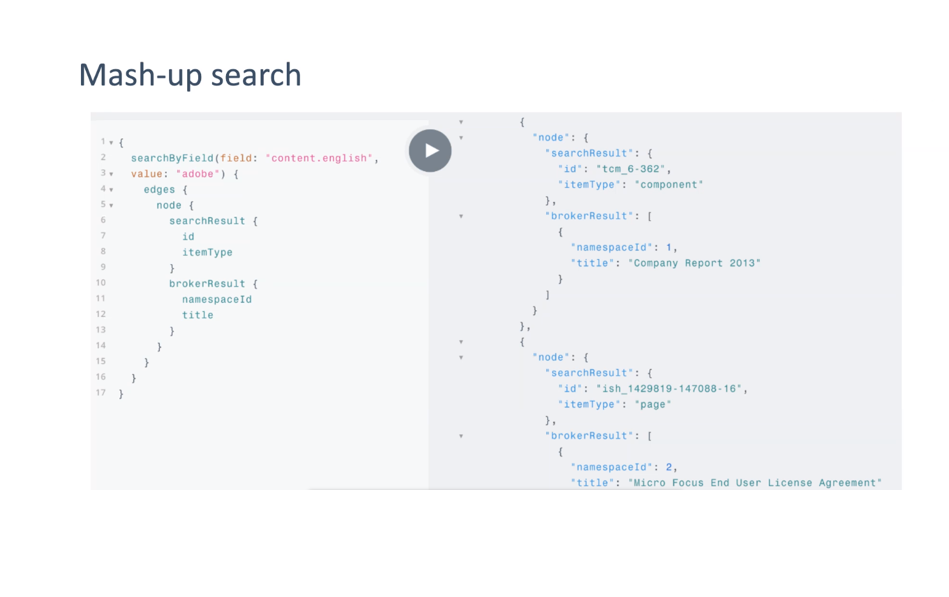

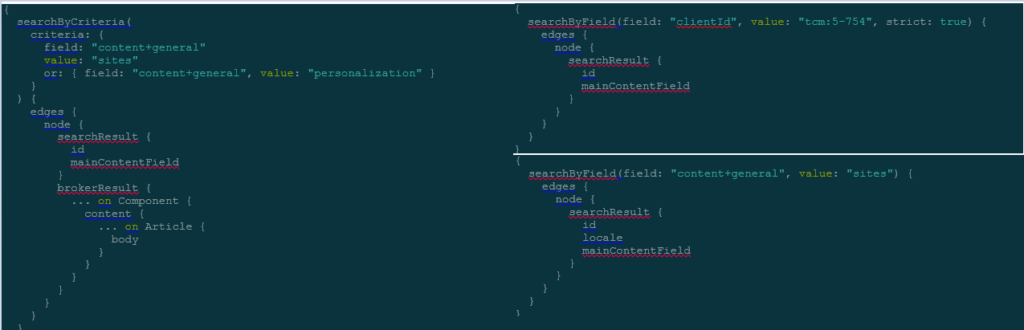

spring.profiles.active=${springprofilesactive:broker,search}

GraphQL Search

searchById(indexName, identifier): [Result]

searchByField(after, first, indexName, field, strict, value, resultFilter, sortBy): SearchConnection

searchByCriteria(after, first, indexName, field, strict, value, resultFilter, sortBy): SearchConnection

searchByRawQuery(after, first, indexName, field, strict, value, resultFilter, sortBy): SearchConnection
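The `after` and `first` arguments together with a `SearchConnection` return type suggest cursor-based pagination, although this post does not document the cursor format. The Python sketch below illustrates the general idea over an in-memory list, using list indexes as stand-in cursors; it is an assumption about the pattern, not a description of the actual search API.

```python
from typing import List, Optional, Tuple


def paginate(items: List[str], first: int,
             after: Optional[str] = None) -> Tuple[List[str], Optional[str], bool]:
    """Return (page, end_cursor, has_next_page) for the items following the cursor.

    Cursors here are plain list indexes encoded as strings.
    """
    start = int(after) + 1 if after is not None else 0
    page = items[start:start + first]
    # The end cursor points at the last item returned; keep the old one if the page is empty.
    end_cursor = str(start + len(page) - 1) if page else after
    has_next_page = start + len(page) < len(items)
    return page, end_cursor, has_next_page


if __name__ == "__main__":
    results = ["a", "b", "c", "d", "e"]
    cursor = None
    while True:
        page, cursor, more = paginate(results, first=2, after=cursor)
        print(page)
        if not more:
            break
```

A client repeats the call, passing the last cursor it received as `after`, until no further page is reported.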

SDL Tridion Docs + Tridion Sites

Publishing Model

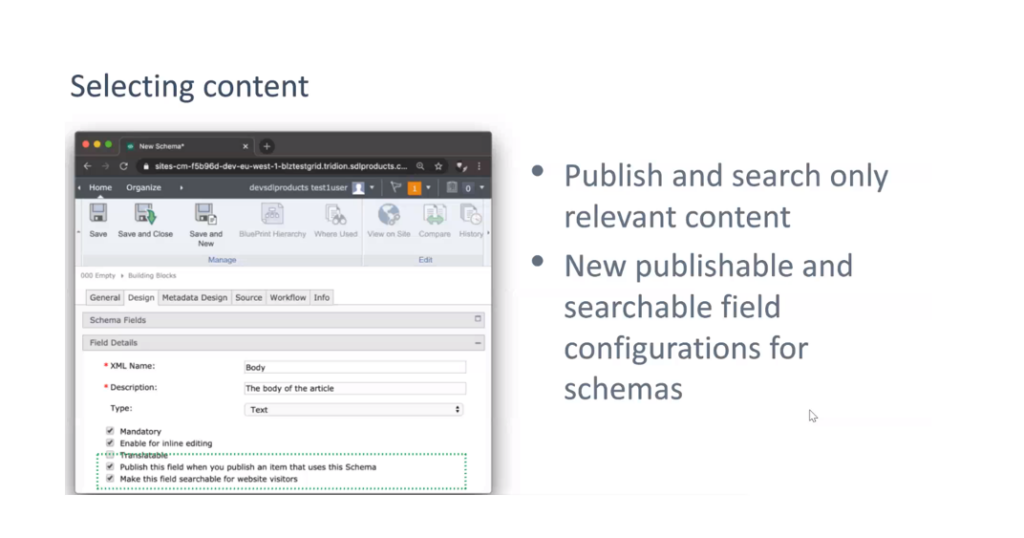

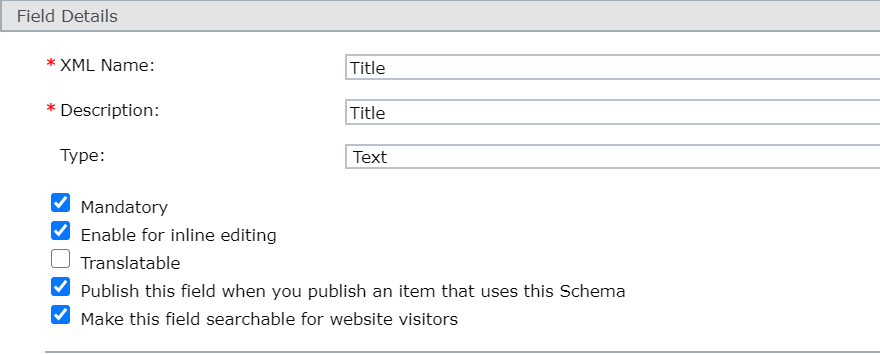

New data publishing capabilities allow you to define – at the content schema level – which fields need to be published to DXD, as well as which ones are searchable. This highly granular control over what data is transferred to DXD adds extra security to your environments.
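The idea of per-field control can be sketched as follows: each schema field carries a "publishable" and a "searchable" flag, and only flagged fields end up in the JSON that is sent to delivery. The Python below is a conceptual illustration only; the `FieldSetting` class, the `build_payload` function and the payload shape are all hypothetical and do not reflect the actual published data format.

```python
import json
from dataclasses import dataclass
from typing import Any, Dict, List


@dataclass
class FieldSetting:
    name: str
    publishable: bool
    searchable: bool


def build_payload(values: Dict[str, Any], settings: List[FieldSetting]) -> Dict[str, Any]:
    """Keep only publishable fields, and list which of those are searchable."""
    published = {
        s.name: values[s.name]
        for s in settings
        if s.publishable and s.name in values
    }
    searchable = [s.name for s in settings if s.searchable and s.name in published]
    return {"fields": published, "searchable": searchable}


if __name__ == "__main__":
    settings = [
        FieldSetting("title", publishable=True, searchable=True),
        FieldSetting("body", publishable=True, searchable=False),
        FieldSetting("internalNotes", publishable=False, searchable=False),
    ]
    values = {"title": "Hello", "body": "Text", "internalNotes": "do not publish"}
    print(json.dumps(build_payload(values, settings)))
```

Fields that are not marked as publishable never leave the content manager in this model, which is where the extra security comes from.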

Data Publishing

Publish content as structured data (JSON) next to the existing rendered content

Standard data model – no need for data modifications or custom templates

Benefits of Data Publishing

New field properties for the Schema

Applicable for semantic content models

Each Schema field will have checkbox to specify

General Settings for Schema

ECL data publishing

Template-less publishing: future is near

A new user experience

SDL Tridion Sites 9.5 focuses on delivering major improvements for employees who work in Content Manager Explorer (CME). We have gathered input from a large number of customer interviews, user experience (UX) sessions, and through an extensive pilot period – combined with ideas and enhancements for usability improvements that we collected previously through Support, SDL Ideas, the SDL Community and other channels.

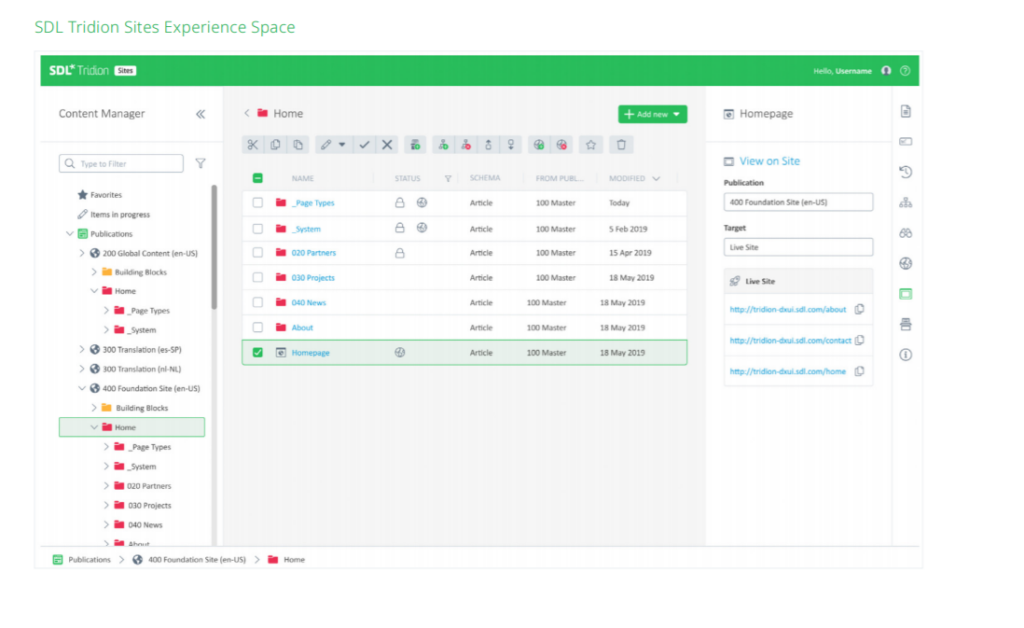

Experience Space – an exceptional new way of working

The name of the new user interface is Experience Space. It’s a workspace that helps you build great experiences faster and easier, with fewer clicks and better defaults. It also speeds up onboarding of new employees and reduces training needs.

Experience Space broadly offers the same capabilities as the current CME – referred to as the Classic UI from now onwards – but with some important features added, such as an improved BluePrinting® viewer and an in-context page preview with clear actions and panels.

Besides offering easier content management, it also ships with a brand new Rich Text Editor that improves accessibility checks, copy/paste and table editing, and support for certain touch-enabled devices.

The new user interface supports your existing page and content models to facilitate a smooth transition. SDL Tridion Sites 9.5 ships with both the Classic UI and Experience Space so they can be used in parallel.

Dynamic Experience Delivery (DXD)

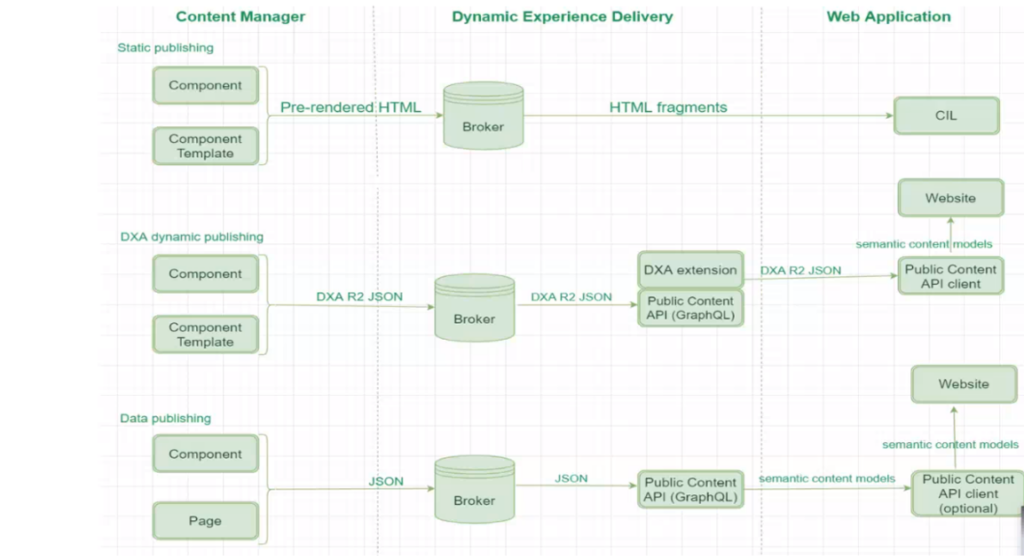

Dynamic Experience Delivery (DXD) is the content delivery component of SDL Tridion. It has been improved in two key areas – querying content through the GraphQL API and Elasticsearch-based search.

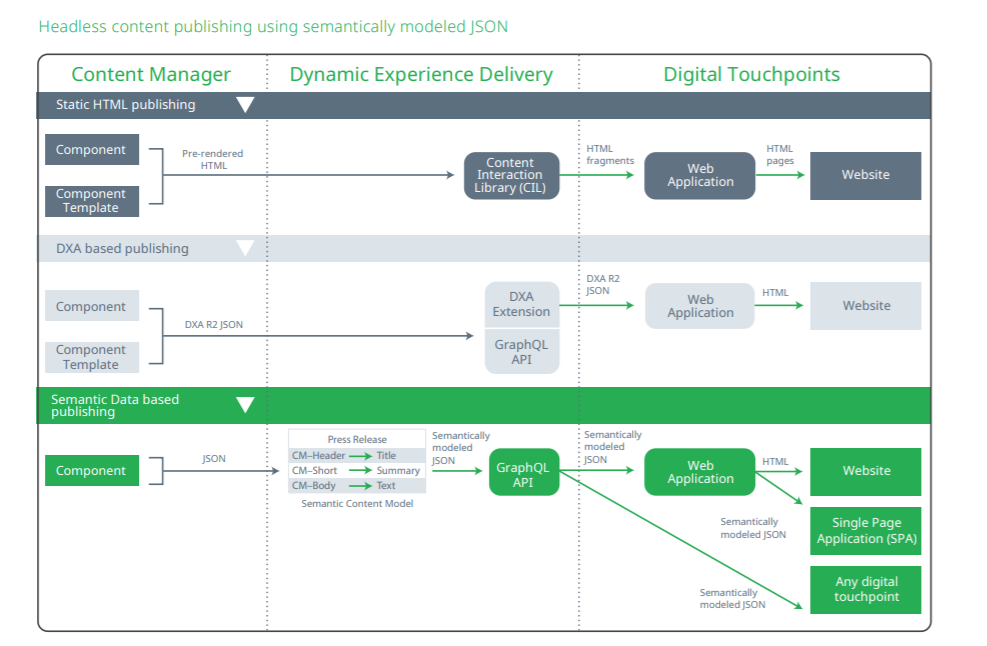

Headless Content Publishing

To make headless content publishing even easier, SDL Tridion Sites 9.5 redefines the way content is published from your content manager to your content delivery (DXD) environments – as well as how it can be queried from there.

New data publishing capabilities allow you to define – at the content schema level – which fields need to be published to DXD, as well as which ones are searchable. This highly granular control over what data is transferred to DXD adds extra security to your environments.

Content is published to DXD in JSON format for easy headless content consumption. The new JSON publishing (depicted in green below) runs in parallel with your existing template/rendering mechanism, to ensure backward compatibility.

Once JSON content has been published to DXD, a new feature called ‘Semantic Content Models’ allows you to define a mapping between the Tridion content fields and how they are queried through the GraphQL API. This abstraction mechanism removes dependencies between the two, so that future changes to your content model don’t directly impact your front-end applications. As a result, your front-end developers can build engaging digital experiences more easily, without needing to know the inner workings of SDL Tridion.

Search

SDL Tridion Sites now ships with out-of-the-box support for Elasticsearch-based search for published content, helping you find published content within pages, components and binaries. You can also search for content from SDL Tridion Docs, providing federated search capabilities across both unstructured and structured content. People searching on your website or other digital channels can now find all the relevant information quickly and easily, without the need to perform multiple searches across your marketing content or in-depth product and service information.

Since SDL Tridion Experience Optimization uses the same technology, you can also personalize the customer experience across various content types, all using the same technology and infrastructure footprint.

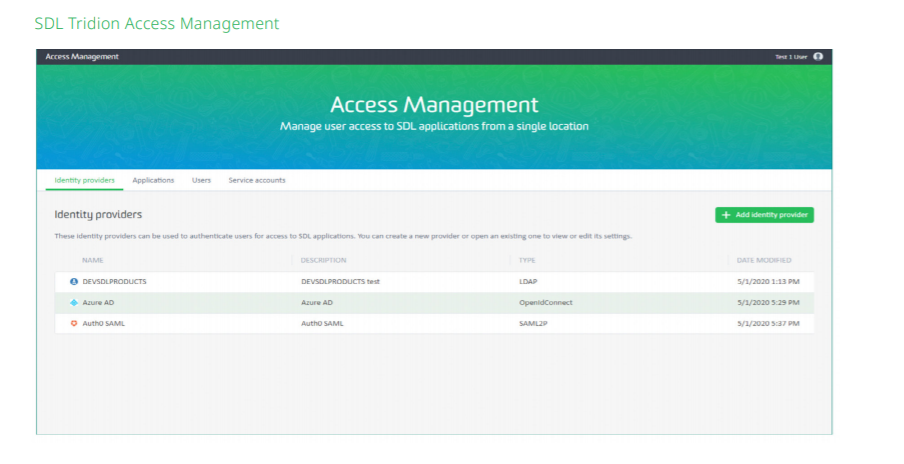

Access Management

A new Access Management UI allows you to configure external identity providers such as Auth0, PingIdentity and Microsoft Azure AD – supporting protocols including SAML 2.0, OpenID Connect, Windows Authentication and LDAP. SDL Tridion also keeps an audit trail of changes made to identity provider settings and user profiles, so you can always see who has changed what and when.

Summary of features launched per release